How to Tell if Something is AI-Written

It is easy for me, with aphantasia

You have a “feeling” that a piece of writing is AI-written. It sounds like word salad, a mashup of textual patterns that gestures toward meaning but isn’t actually anchored in reality.

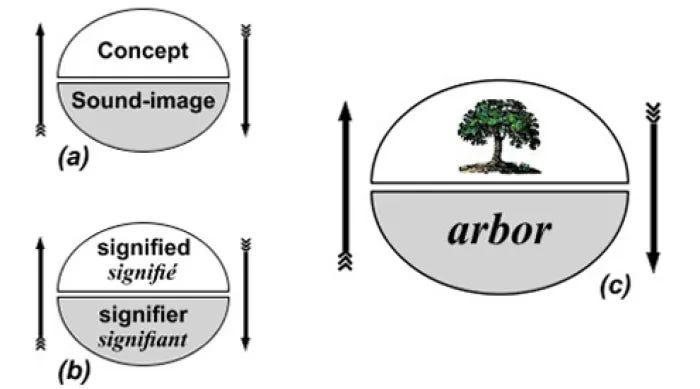

The reason for all this is how language models work. Briefly, for humans, language unites a signifier (a word, like “tree”) with a signified (an actual or imagined tree). This relationship is arbitrary, though grounded in social convention and human experience. When we use language we are trusting in and building a shared understanding. When someone says or writes “tree” (or arbre, in French), a tree might spring to mind, even if it is a wildly different kind of tree than was meant.

Large Language Models (LLMs), however, only operate in the realm of signifiers. There are no signifieds. An LLM generates text through a process called autoregression: it predicts one word (or token) at a time, based on all the words that came before it. If you give it the phrase “The cat sat on the…” it analyzes the pattern and predicts “mat” or “chair” as a statistically probable next word. Always keep in mind there is no cat, there is no chair. Each newly generated word then becomes part of the context for predicting the one after it.
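To make the “predict the next word” loop concrete, here is a toy sketch in Python. It is a heavy simplification: real LLMs use neural networks over subword tokens, not a table of counts, and the tiny corpus, the function names (build_counts, predict_next, generate), and the three-word context window are all made up for illustration. What it shares with the real thing is the loop itself: look at the words so far, pick a likely next word, append it, repeat.

```python
from collections import Counter, defaultdict

# Tiny invented corpus, purely for illustration.
CORPUS = (
    "the cat sat on the mat . "
    "the cat sat on the chair . "
    "the cat sat on the mat ."
)


def build_counts(words, max_context=3):
    """Map each context (up to max_context preceding words) to a Counter of next words."""
    counts = defaultdict(Counter)
    for i in range(1, len(words)):
        for n in range(1, max_context + 1):
            if i - n < 0:
                break
            context = tuple(words[i - n:i])
            counts[context][words[i]] += 1
    return counts


def predict_next(counts, history, max_context=3):
    """Return the most frequent follower of the longest known suffix of history."""
    for n in range(min(max_context, len(history)), 0, -1):
        context = tuple(history[-n:])
        if context in counts:
            return counts[context].most_common(1)[0][0]
    return None  # nothing in the corpus ever followed this context


def generate(counts, prompt, steps=3, max_context=3):
    """Autoregressive loop: each predicted word is appended and reused as context."""
    history = prompt.split()
    for _ in range(steps):
        next_word = predict_next(counts, history, max_context)
        if next_word is None:
            break
        history.append(next_word)
    return " ".join(history)


if __name__ == "__main__":
    counts = build_counts(CORPUS.split())
    print(generate(counts, "the cat sat on the", steps=2))
    # prints: the cat sat on the mat .
```

Nothing in that table knows what a cat or a mat is. It only records which words followed which, and “mat” wins because it followed “sat on the” twice and “chair” only once.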

Generally, human writing moves from experience or imagination or observation to linguistic expression. You start with signifieds (concepts, experiences, ideas) and select signifiers (words) to express them. You see or imagine a red chair and write, “That chair is red.” An LLM, lacking any experience of chairs or redness, might produce this instead:

When considering furniture aesthetics, it’s important to note that color plays a significant role in both visual appeal and functional design considerations. Red, as a warm tone, can create dynamic focal points while also presenting challenges in terms of spatial harmony.

Look closely and see what’s going on. AI moves from textual pattern to textual pattern. It has learned that “red” co-occurs with words like “warm,” “dynamic,” “visual appeal” in its training data, and “furniture” connects to “aesthetics,” “design,” “functional.” So it produces plausible-sounding text about the concept of red chairs without ever having seen one.

Ask yourself: did anything spring to mind the way a red chair might have? You can probably picture a chair, but not “furniture aesthetics.” You can imagine “red” but not “functional design considerations” or “dynamic focal points” easily.

So here’s a handy rule: if you can’t see anything, if nothing springs to mind, it’s probably AI. I say this even with aphantasia, as someone who, to compensate, has long invested words (signifiers) with extra energy that I can somehow sense without seeing.

Telltale Contrasting Structures

Another rule: look for formulations like “it’s not just X, but also Y” or “rather than A, we should focus on B.” This structure is a form of computational hedging. Because an LLM only knows the relationships between words, not between words and the world, it wants to avoid falsifiable claims. (I’m saying “want” here as a joke, but it helps to see LLMs as wafflers.) By being all balance-y it can sound comprehensive without committing to anything.

An LLM navigates what researchers call “conceptual space,” the network of relationships between ideas as they appeared in training text. This is different from the network of relationships between ideas and reality that we struggle with. LLMs live entirely within the realm of signifiers, tracking statistical relationships between word-forms without access to the signifieds that anchor language to human experience.

Again, you may notice that no specific idea or image or word springs to mind when you’re reading AI-generated words. You can see this in phrases like:

“It’s not only efficiency that matters, but also stakeholder engagement.”

“Rather than focusing on obstacles, we should embrace transformative opportunities.”

“Effective collaboration requires not only interpersonal care but also the strategic navigation of team synergy.”

Nothing comes to mind here, even for those of us without a mind’s eye. There is no shared signified for “obstacles” or “interpersonal challenges.”

Just for fun, this is how a human might use the same construction:

“It’s not that I’m cheap, it’s that the restaurant had too many forks.”

“Instead of apologizing, she brought donuts.”

“His idea of teamwork was to circle my title and draw a sad face.”

When humans write this way, you have to work a little to understand (or half understand). You can easily see forks, donuts, a sad face, even if you have to ask what they signify in those sentences. With LLM text you never have to do that work. LLMs play it safe. They also want to sound sophisticated and comprehensive.
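The contrast frames above are regular enough that a few lines of Python can flag them. This is a rough sketch, not a detector: the two patterns, the 80-character window, and the name find_contrast_frames are illustrative assumptions, and they will miss plenty of variants.

```python
import re

# Hypothetical patterns for the two frames discussed above.
CONTRAST_PATTERNS = [
    # "not just / not only / not merely ... but (also)"
    re.compile(r"\bnot (?:just|only|merely)\b.{1,80}?\bbut(?: also)?\b", re.IGNORECASE),
    # "rather than ... ," or "instead of ... ,"
    re.compile(r"\b(?:rather than|instead of)\b.{1,80}?,", re.IGNORECASE),
]


def find_contrast_frames(text):
    """Return every substring of text that matches one of the contrast patterns."""
    hits = []
    for pattern in CONTRAST_PATTERNS:
        hits.extend(match.group(0) for match in pattern.finditer(text))
    return hits


if __name__ == "__main__":
    samples = [
        "It's not only efficiency that matters, but also stakeholder engagement.",
        "Rather than focusing on obstacles, we should embrace transformative opportunities.",
        "Instead of apologizing, she brought donuts.",
        "That chair is red.",
    ]
    for sentence in samples:
        print(len(find_contrast_frames(sentence)), sentence)
```

Note that the human sentence about donuts gets flagged too. A match only tells you where to look; whether anything springs to mind is still the real test.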

Look for unnaturally perfect balance, where every point has a counterpoint, every advantage has a corresponding challenge mentioned. That’s AI.

Look for the absence of concrete details. AI-generated text about education might discuss “preparing students for the future workforce” and “developing critical thinking skills,” but it rarely mentions specific students, particular classroom moments, or concrete pedagogical challenges (like no pencils). The language floats at the level of institutional abstractions because that’s all the model has.

LLMs can, when asked, produce what feels like a personal narrative until you poke at it. A human personal narrative is full of irrelevant details and random memories. There is mess and uncertainty and sometimes cringe. LLM anecdotes serve the argument too neatly.

LLMs are best at genres that rely on recombining established textual patterns rather than expressing original insight.

Satire, for example, is a matter of inverting familiar tropes and arguments. AI can take established satirical frameworks (“if we applied this logic to X”) and systematically work through them. Think of Swift’s “A Modest Proposal.” When AI does some version of this, you get mechanical cleverness. The humor feels “performed.” Pay special attention to satirical pieces that feel smooth without evoking images.

Similarly, AI can be good at persuasion because it deploys learned rhetorical patterns without needing to believe the argument. It’s like the person on the debate team who can argue any side and you aren’t really sure what he believes. AI has absorbed an enormous repository of persuasive language from its training text. All it needs to do is recombine it convincingly. But are you persuaded?

And of course, AI is good at annual reports and corporate communications. Corporate language already sounds like boilerplate for expressing optimism, acknowledging challenges, and projecting confidence. AI can produce text dense with buzzwords and noun clusters like “stakeholder engagement,” “innovative solutions,” “inclusive excellence,” and “strategic initiatives” appearing within the same paragraph. You won’t be able to “see” any of these concepts.

Test Your Suspicion!

The best way to test whether a text is AI-generated is to feed it into an AI and ask. AI is happy to recognize AI-generated text if you ask nicely. Or even if you don’t.

Ask: does it seem like a specific human wrote this, or does it read like an institutional voice that could represent anyone and no one?

Understanding these markers helps distinguish between human communication— grounded in experience, marked by genuine uncertainty, and expressed through individual voice—and AI output that manipulates sophisticated linguistic patterns without underlying comprehension or authentic perspective. As we navigate this evolving landscape, it is essential to recognize both the opportunities and challenges presented by AI-generated content. By fostering awareness, encouraging dialogue among stakeholders, and implementing thoughtful strategies for responsible integration, we can ensure that technological innovation serves to enhance rather than diminish authentic communication. Ultimately, the future will depend not only on our ability to leverage these tools effectively, but also on our commitment to preserving the creativity, empathy, and lived experience that define what it means to be human.

(Final test: is that paragraph my voice or AI’s?)

Thank you, obvious human being, for writing this so clearly. I’m reminded of what my favorite high school creative writing teacher used to write at the bottom of our angst-ridden teenaged musings in fine green felt-tipped pen: Aye, Eye, I. “Aye” for yes, you successfully communicated your point. “Eye” for you made it vivid and real for me in my mind’s eye. And “I” for I can relate to what you’ve written. LLMs wouldn’t stand a chance in Mr. Barone’s class.

“This article isn’t just brilliant—it’s brave.”

In all seriousness, this is so well said, Hollis. I’m writing something similar on what I call Telltale AI Tics. More soon!